Mining your data’s value

Companies know the value of their assets. They understand the value of their operations, their heavy equipment, and the people who make their operations possible. But do they know the value of their data?

Putting operational data to work can be the difference between business intelligence and business as usual. Capturing and using real-time operational data provides the panoramic view needed to put mining operations on the path to improving efficiencies and increasing production. The competitive advantage no longer lies in bigger equipment or deeper mines but in leveraging data to make the smartest business decisions possible. While that is easier said than done, it is achievable with the right mindset and tools.

"When traditional methods of data capture are introduced to modern technology, and the people who use them, you often have an awkward first meeting," says Polymathian product leader Steven Donaldson. "The incumbent systems for capturing and dispatching the statuses of people, equipment and underground spaces are often manual, meaning end-users have to navigate outside their comfort zones when deploying new technology."

The days of manually manipulating data are in the rear-view mirror. Industry must now transition from manual processes and systems for data capture to autonomous methods that facilitate more intelligent decision-making. Part of putting your data to work is using it consistently. Daily use inherently improves data quality by exposing collection gaps, process errors or inconsistencies. Conversely, data collected sporadically is useless at best and, at worst, leads to wasted effort and compromised decision-making. Bad data leads to bad decisions.

Data aggregated over time can compensate for inconsistencies or gaps in collection processes. But this is very different to utilising comprehensive, high-quality data that is timely and accurate enough to facilitate near- or real-time decisions. For example, most mine operators have access to quarterly fleet utilisation data that is "good enough" to decide whether to budget for additional underground equipment or not. But how many shift bosses can access performance data delivered in time to recalibrate the shift plan on-demand? Just like you shouldn't drive a car at night without headlights, you shouldn't be making critical operational decisions without the visibility data provides.

Let's start with the data and some of its necessary attributes.

Data access and availability

For data to be useful it needs to meet specific criteria, especially if the end goal is to use it to make critical decisions in a timely manner.

Data must be:

- Timely = data must be available within a prescribed timeframe

- Accurate = data must represent the real world in a way that is precise in time and function

- Integrated = data sources must be consolidated and accessible from a centralised location
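
As a rough illustration of how the first and third criteria might be checked in practice, the sketch below tests whether a reading arrived within a prescribed timeframe and whether every expected source is present in the central store. The `Reading` fields, the source names and the five-minute limit are assumptions made for this example, not part of any particular product. Accuracy is harder to test generically and is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reading:
    # All field names are hypothetical, chosen for illustration only.
    source: str            # system the reading came from
    equipment_id: str
    recorded_at: datetime  # when the event happened underground
    received_at: datetime  # when it reached the central store


def is_timely(reading: Reading, max_delay: timedelta) -> bool:
    """True if the reading reached the central store within max_delay."""
    return reading.received_at - reading.recorded_at <= max_delay


def missing_sources(readings, expected_sources):
    """Expected sources that have contributed no readings at all."""
    return set(expected_sources) - {r.source for r in readings}


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 6, 0)
    readings = [
        Reading("weightometer", "LHD01", t0, t0 + timedelta(seconds=30)),
        Reading("tablet", "JUMBO02", t0, t0 + timedelta(minutes=45)),
    ]
    limit = timedelta(minutes=5)  # assumed "prescribed timeframe"
    for r in readings:
        print(r.source, "timely" if is_timely(r, limit) else "late")
    print(missing_sources(readings, ["weightometer", "tablet", "fleet_system"]))
```

Checks like these only show that data is arriving on time and from every source. Whether it represents the real world precisely still has to be validated against what actually happened underground.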

Data capture and integration

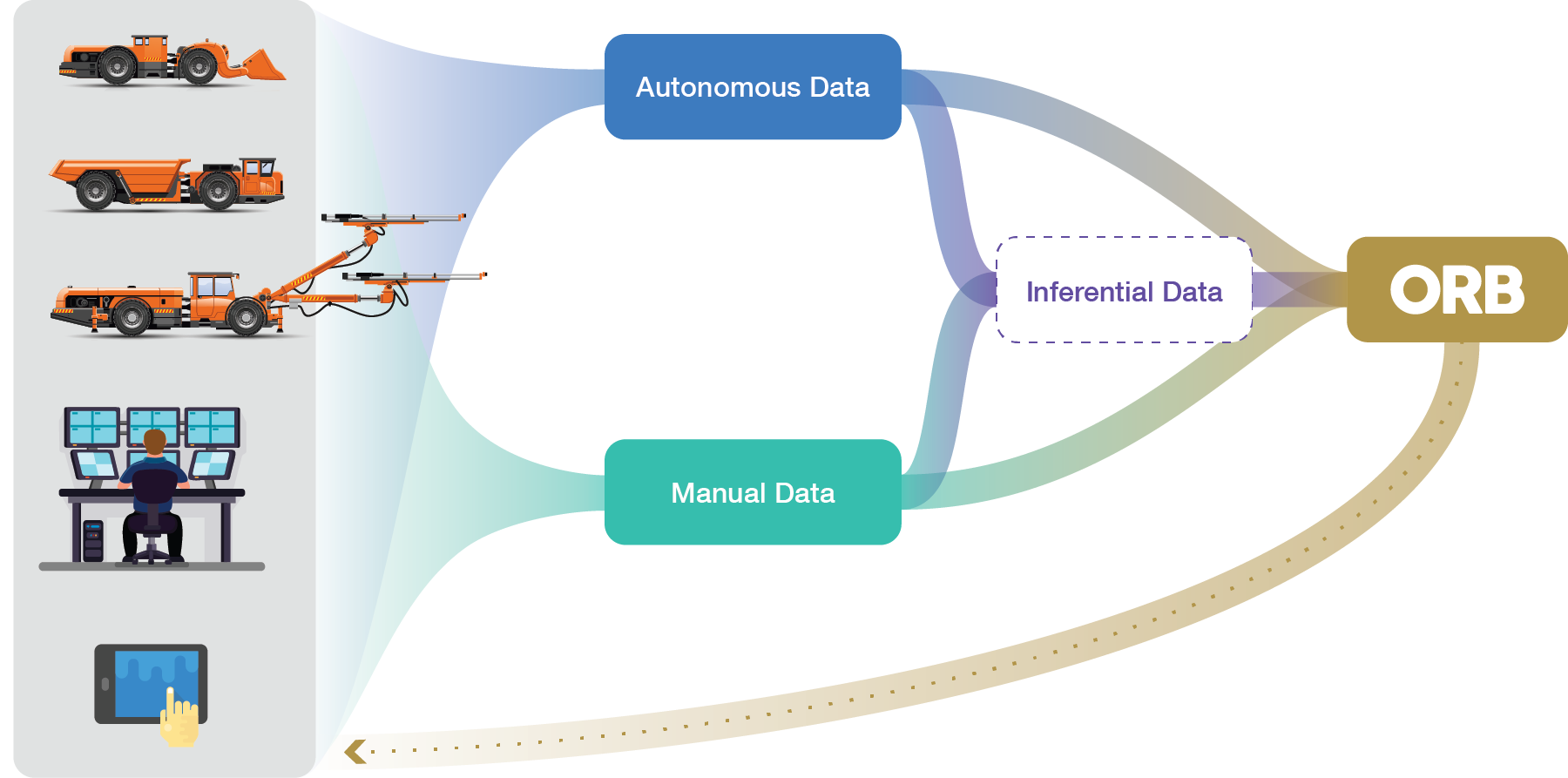

Getting information in and out of the system is paramount. A key feature of a real-time data capture and dispatch system is its ability to integrate with different sources. Tools like Polymathian's ORB Live, a short interval control module, are purpose-built to integrate seamlessly with established underground systems.

Examples of how data is ingested from different sources include:

- Autonomous data capture – this is achieved through third-party integrations

Example – integration with the bucket weightometer that automatically records tonnes tipped

- Autonomous data inference – data that is automatically updated based on known data from another source or data point

Example – when a loader's status is set to maintenance but the automated tonnes capture system records unloading tonnes at a stockpile, the loader's position can be inferred, and its status updated without manual intervention.

- Manual data capture at the source – this can be captured via integrated tablets or through third-party integrations

Example – jumbo operator entering bolting metrics into a tablet in their machine

Any combination of the above data capture methods at the mine source will support performance evaluation to improve productivity and plan compliance.
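
The loader example of autonomous data inference can be sketched in a few lines. This is a minimal illustration under assumed names: the `Loader` class, the status strings and the `apply_tip_event` function are hypothetical and do not describe how ORB Live or any other system implements inference.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Loader:
    # Hypothetical record of what the system currently believes about a loader.
    loader_id: str
    status: str                      # assumed values: "maintenance", "operating"
    location: Optional[str] = None


def apply_tip_event(loader: Loader, stockpile: str) -> bool:
    """Update a loader from an automatically captured tip event.

    A loader that tips tonnes at a stockpile must be working there, so its
    location is set to that stockpile and its status to "operating".
    Returns True if the recorded status had to be corrected.
    """
    corrected = loader.status != "operating"
    loader.status = "operating"
    loader.location = stockpile
    return corrected


if __name__ == "__main__":
    lhd = Loader("LHD01", status="maintenance")
    if apply_tip_event(lhd, "Stockpile A"):
        print(f"{lhd.loader_id}: status corrected to {lhd.status} at {lhd.location}")
```

The point of the sketch is that the correction needs no manual entry: one trusted automatic source (the tonnes capture) overrides a stale status that was set by hand.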

Putting your data to work

Technology application is a gradual evolution, so companies often have a variety of systems operating at different levels of maturity and integration. ORB Live works as a data aggregator across these systems, collecting data from all the various sources. The outcome is an amalgamation of events representing the real-time state as a digital twin.

High-quality data provides a window into the real-time state, allowing operators to move away from manual processes and towards short interval control. An operator's team can transition from a defensive planning stance to updating plans based on actual events as they occur. This includes:

- Equipment breakdowns

- Operating faster or slower than expected

- Rehab requirements

As events are captured and integrated throughout the day, planning teams can react quickly and consistently when issues arise. Automated data capture and equipment dispatch allow for more consistent decision-making across shifts, further improving short interval control.
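
To make "operating faster or slower than expected" concrete, the sketch below compares tonnes moved so far in a shift against the plan and flags when the gap is large enough to warrant updating the plan. It assumes, purely for illustration, that planned tonnes accrue evenly across the shift and that a 10% tolerance triggers a replan. Real shift plans and thresholds will differ, and all function names here are hypothetical.

```python
def expected_tonnes(planned_tonnes: float, elapsed_hours: float, shift_hours: float) -> float:
    """Tonnes the plan expects by now, assuming an even rate across the shift."""
    if planned_tonnes <= 0:
        raise ValueError("planned_tonnes must be positive")
    if not 0 < elapsed_hours <= shift_hours:
        raise ValueError("elapsed_hours must be within the shift")
    return planned_tonnes * elapsed_hours / shift_hours


def plan_deviation(planned_tonnes: float, actual_tonnes: float,
                   elapsed_hours: float, shift_hours: float) -> float:
    """Fractional deviation from plan: negative is behind, positive is ahead."""
    expected = expected_tonnes(planned_tonnes, elapsed_hours, shift_hours)
    return (actual_tonnes - expected) / expected


def needs_replan(deviation: float, tolerance: float = 0.10) -> bool:
    """True when the deviation exceeds the assumed tolerance in either direction."""
    return abs(deviation) > tolerance


if __name__ == "__main__":
    # 1,200 t planned over a 12-hour shift; 300 t moved after 4 hours.
    dev = plan_deviation(1200, 300, elapsed_hours=4, shift_hours=12)
    print(f"deviation: {dev:+.0%}, replan: {needs_replan(dev)}")
```

With quarterly data this comparison can only be made in hindsight. With events captured during the shift, it can be rerun every time a new tonnes reading arrives.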

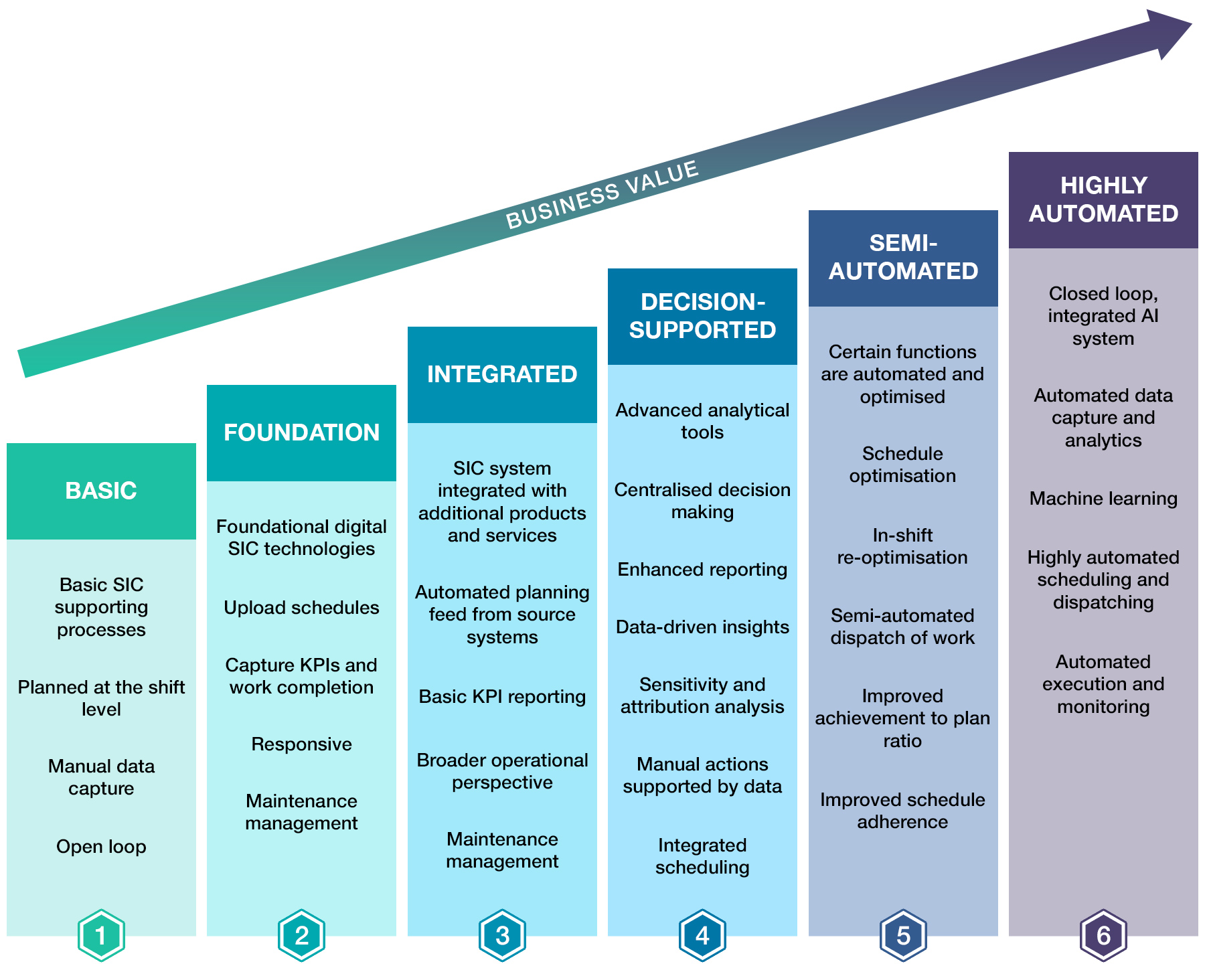

Short Interval Control System Maturity Levels - Global Mining Guidelines Group

A case for optimisation

The complicated web of interdependent networks in underground mining is the ideal environment for applying mathematical optimisation methods to support short interval control. For example, the performance of a bogging pattern in a block cave depends on how the LHDs (load-haul-dump loaders) interact, on the material handling system (MHS: trucks, crushers, conveyors, etc.), and on the draw points being bogged. A bogging pattern where all LHDs bog long tramming routes will likely not meet the required tonnage input to the MHS. In contrast, a bogging pattern with relatively short tramming cycles will likely exceed the MHS capacity, resulting in underutilisation of equipment.

Furthermore, in a cave that uses ore passes connected to a trucking level, truck haulage adds a further dimension to the optimisation. Because the ore passes have limited capacity, a poorly managed loader and trucking fleet may result in full ore passes, which means loaders must stop bogging.

When the aforementioned fleet management problem is combined with geotechnical and operational constraints, it follows that some bogging patterns are superior to others. Although draw point targets largely dictate what tonnes must be bogged over a given period, there is flexibility in determining the bogging sequence. By capturing the bogging performance data and exploiting this flexibility with optimisation tools, it is possible to prescribe a bogging pattern that optimises performance, thereby significantly influencing cave productivity.
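
A toy version of this trade-off can be written as a brute-force search. The sketch below assigns each LHD to a different draw point and picks the combination whose combined tonnes per hour sits closest to the MHS capacity, so the pattern neither starves nor floods the material handling system. Everything in it is an assumption for illustration: the cycle times, bucket size and capacity are made-up numbers, draw point targets and geotechnical constraints are ignored, and a production optimiser would use a proper mathematical programming model rather than enumeration.

```python
from itertools import combinations


def lhd_rate(bucket_tonnes: float, cycle_minutes: float) -> float:
    """Tonnes per hour for one LHD bogging one draw point."""
    return bucket_tonnes * 60.0 / cycle_minutes


def best_pattern(cycle_minutes_by_draw_point, n_lhds, bucket_tonnes, mhs_capacity_tph):
    """Return (draw_points, total_tph) for the pattern closest to MHS capacity.

    Tries every way of sending n_lhds loaders to distinct draw points.
    """
    if n_lhds > len(cycle_minutes_by_draw_point):
        raise ValueError("more LHDs than draw points")
    best = None
    for pattern in combinations(sorted(cycle_minutes_by_draw_point), n_lhds):
        total = sum(
            lhd_rate(bucket_tonnes, cycle_minutes_by_draw_point[dp]) for dp in pattern
        )
        gap = abs(total - mhs_capacity_tph)
        if best is None or gap < best[0]:
            best = (gap, pattern, total)
    return best[1], best[2]


if __name__ == "__main__":
    # Hypothetical round-trip cycle times (minutes) per draw point.
    cycles = {"DP1": 4, "DP2": 6, "DP3": 10, "DP4": 12}
    pattern, tph = best_pattern(cycles, n_lhds=2, bucket_tonnes=10, mhs_capacity_tph=160)
    print(pattern, tph)
```

With these made-up inputs, the two shortest routes (DP1 and DP2) would deliver 250 t/h against a 160 t/h capacity, while the two longest (DP3 and DP4) would deliver only 110 t/h. The search settles on DP2 and DP3 at a combined 160 t/h, which is the kind of balanced pattern the text describes.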

Next stop – optimisation and highly autonomous short interval control

As mine operations become more complex, using optimisation tools like ORB can help overcome cumbersome manual decision-making processes. Using data can reveal insights into productivity and efficiency performance to help understand which processes are causing delays or bottlenecks. But this can only be accomplished by harnessing the power of operational performance data.

Incorporating advances in digital twin technologies with real-time data feeds, automated and optimised mine planning, and just-in-time equipment dispatch will improve mine productivity and maximise profitability by prioritising the right work at the right locations.

Learn more

Read how Rio Tinto deployed ORB for real-time short interval control for block cave LHD extraction drive allocation and draw point dispatch. ORB demonstrated significant productivity and compliance improvements during the final production years of their Argyle Diamond Mine.

Article contributor

Steven Donaldson, Partner and Co-founder

Steven is a mathematician, software developer, and founding partner at Polymathian. He has over 10 years of experience applying operations research techniques to build and deploy optimisation, simulation, and machine learning decision support tools for industry.
